The Dogs vs. Cats challenge [1] on Kaggle ended in January 2014, but it is still extremely popular for getting started in deep learning. There are two main reasons for this: the data set is small (25,000 images, about 600MB), and it is relatively easy to get a good score.

There are many online articles on how to preprocess the data, design a CNN model and train it, so in this post I am not going to discuss the implementation details. Instead, I am simply going to report my results using a custom-designed model and using transfer learning. I used TensorFlow and tf.keras with Python, and the code is available in my Exploring Deep Learning repository [2] on GitHub.

Learning using a Custom Model

Note that this is my best attempt, not my first. I used four blocks, each consisting of a 2D convolution layer followed by max pooling. These are followed by two dense layers and a softmax output layer. I also used dropout layers and image augmentation. The exact command line for training this model is:

TrainCNN.py --cnnArch Custom --classMode Categorical --optimizer Adam --learningRate 0.0001 --imageSize 224 --numEpochs 30 --batchSize 16 --dropout --augmentation --augMultiplier 3

The CNN model is given below:

Model: "Custom"

| Layer (type) | Output Shape | Param # |
|---|---|---|
| conv2d (Conv2D) | (None, 224, 224, 32) | 896 |
| max_pooling2d (MaxPooling2D) | (None, 112, 112, 32) | 0 |
| conv2d_1 (Conv2D) | (None, 112, 112, 64) | 18496 |
| max_pooling2d_1 (MaxPooling2D) | (None, 56, 56, 64) | 0 |
| conv2d_2 (Conv2D) | (None, 56, 56, 128) | 73856 |
| max_pooling2d_2 (MaxPooling2D) | (None, 28, 28, 128) | 0 |
| conv2d_3 (Conv2D) | (None, 28, 28, 256) | 295168 |
| max_pooling2d_3 (MaxPooling2D) | (None, 14, 14, 256) | 0 |
| flatten (Flatten) | (None, 50176) | 0 |
| dense (Dense) | (None, 512) | 25690624 |
| dense_1 (Dense) | (None, 256) | 131328 |
| dense_2 (Dense) | (None, 2) | 514 |

Total params: 26,210,882; Trainable params: 26,210,882; Non-trainable params: 0
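The parameter counts in the summary can be reproduced by hand, which is a useful sanity check on the architecture. The sketch below is not taken from the author's repository; it assumes 3x3 kernels, 'same' padding and 2x2 max pooling, since those are what the reported output shapes and parameter counts imply, and the helper names are hypothetical.

```python
def conv2d_params(kernel: int, in_ch: int, out_ch: int) -> int:
    # kernel x kernel x in_ch weights per filter, plus one bias per filter.
    return (kernel * kernel * in_ch + 1) * out_ch

def dense_params(in_units: int, out_units: int) -> int:
    # Full weight matrix plus one bias per output unit.
    return (in_units + 1) * out_units

def custom_model_params(image_size: int = 224, filters=(32, 64, 128, 256)):
    """Return (layer_name, param_count) pairs and the flattened feature size."""
    layers = []
    size, in_ch = image_size, 3  # RGB input
    for i, out_ch in enumerate(filters):
        name = "conv2d" if i == 0 else f"conv2d_{i}"
        # Assumed 3x3 kernel with 'same' padding, so the spatial size is unchanged.
        layers.append((name, conv2d_params(3, in_ch, out_ch)))
        size //= 2  # 2x2 max pooling halves height and width and has no parameters
        in_ch = out_ch
    flat = size * size * in_ch  # 14 * 14 * 256 for a 224x224 input
    layers += [
        ("dense", dense_params(flat, 512)),
        ("dense_1", dense_params(512, 256)),
        ("dense_2", dense_params(256, 2)),  # two-way softmax output
    ]
    return layers, flat

layers, flat = custom_model_params()
for name, params in layers:
    print(f"{name:8s} {params:>10,}")
print(f"Total params: {sum(p for _, p in layers):,}")  # Total params: 26,210,882
```

Almost all of the parameters sit in the first dense layer, because it connects the 50,176 flattened features to 512 units.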

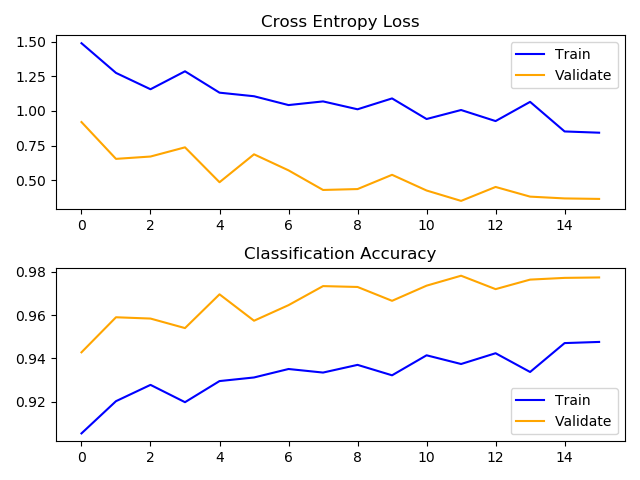

The above model was trained on 15,000 randomly chosen images from the Kaggle data set (7,500 each of dogs and cats) and validated on a separate set of 5,000 images (2,500 each of dogs and cats). The model achieved 94% accuracy after 24 epochs. Training took about 4 hours on my PC with an NVIDIA GeForce GTX 1050 with 2GB of memory.
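A balanced random split like the one described above can be sketched in plain Python. This is an illustration, not the author's code: it assumes the Kaggle training files are named `cat.<n>.jpg` and `dog.<n>.jpg` (12,500 per class), and the function name `stratified_split` and the fixed seed are hypothetical choices.

```python
import random

def stratified_split(filenames, n_train_per_class, n_val_per_class, seed=42):
    """Randomly pick disjoint train/validation sets with equal counts per class.

    Assumes the class label is the prefix before the first '.' in each file
    name, e.g. 'cat.0.jpg' or 'dog.0.jpg'.
    """
    rng = random.Random(seed)
    by_class = {}
    for name in filenames:
        by_class.setdefault(name.split(".", 1)[0], []).append(name)
    train, val = [], []
    for label in sorted(by_class):
        files = sorted(by_class[label])  # sort first so the seed alone fixes the split
        rng.shuffle(files)
        train += files[:n_train_per_class]
        val += files[n_train_per_class:n_train_per_class + n_val_per_class]
    return train, val

# Simulated file list standing in for the 25,000 Kaggle training images.
all_files = [f"{c}.{i}.jpg" for c in ("cat", "dog") for i in range(12500)]
train, val = stratified_split(all_files, 7500, 2500)
print(len(train), len(val))  # 15000 5000
```

Slicing a single shuffled list per class guarantees that no image appears in both the training and validation sets.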

Accuracy for training and validation using a custom CNN model.

Transfer Learning using VGG16 Model

For the second experiment, I used the VGG16 model with ImageNet weights, without its top layers, and added custom dense layers at the end. As in the previous experiment, I used dropout layers and image augmentation. The exact command line for training this model is:

TrainCNN.py --cnnArch VGG16 --classMode Categorical --optimizer Adam --learningRate 1e-5 --imageSize 224 --numEpochs 30 --batchSize 25 --dropout --augmentation --augMultiplier 3

The CNN model is given below:

Model: "VGG16"

| Layer (type) | Output Shape | Param # |
|---|---|---|
| vgg16 (Model) | (None, 7, 7, 512) | 14714688 |
| flatten (Flatten) | (None, 25088) | 0 |
| dense (Dense) | (None, 512) | 12845568 |
| dense_1 (Dense) | (None, 256) | 131328 |
| dense_3 (Dense) | (None, 2) | 514 |

Total params: 27,692,098; Trainable params: 12,977,410; Non-trainable params: 14,714,688
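The trainable and non-trainable counts in this summary follow from freezing the pretrained VGG16 base and training only the new dense head. Below is a minimal sketch of that arithmetic, assuming a 224x224 input (so the base outputs 7x7x512 features); the constant and helper names are hypothetical and not part of the author's code.

```python
VGG16_BASE_PARAMS = 14_714_688  # VGG16 without its top layers, as reported in the summary

def dense_params(in_units: int, out_units: int) -> int:
    # Weight matrix plus one bias per output unit.
    return (in_units + 1) * out_units

flat = 7 * 7 * 512  # 25,088 features from the base for a 224x224 input
head = [dense_params(flat, 512), dense_params(512, 256), dense_params(256, 2)]

trainable = sum(head)              # only the new dense head is updated
non_trainable = VGG16_BASE_PARAMS  # pretrained convolutional base is frozen
print(f"Trainable params: {trainable:,}")              # Trainable params: 12,977,410
print(f"Non-trainable params: {non_trainable:,}")      # Non-trainable params: 14,714,688
print(f"Total params: {trainable + non_trainable:,}")  # Total params: 27,692,098
```

Freezing the base means that only about 13 million of the roughly 27.7 million parameters are updated during training.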

The above model was trained on the same data set as the custom model and achieved an accuracy of 98% after 11 epochs. Clearly, this model is far more efficient and more accurate than the custom-designed model: it needed fewer than half as many epochs (11 vs. 24) and cut the error rate from 6% to 2%.

Accuracy for training and validation using transfer learning from the VGG16 model.